Session Information

Session Type: Poster Session A

Session Time: 10:30AM-12:30PM

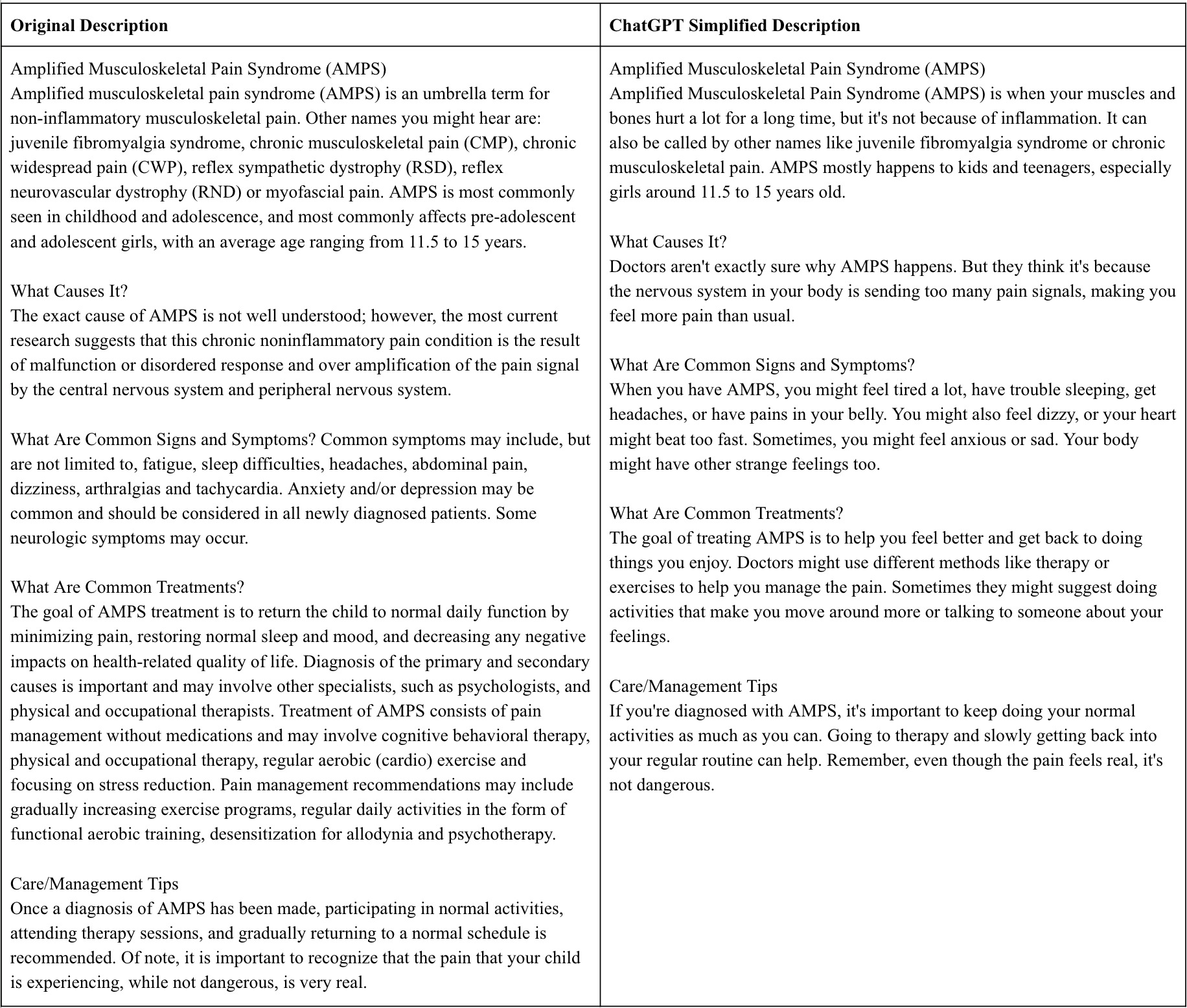

Background/Purpose: Patient education materials are an important resource for health education. The American Medical Association (AMA) recommends that patient education materials be written at or below a 6th grade reading level so that the general public can understand them [1]. We aimed to evaluate the readability of the American College of Rheumatology (ACR) patient education materials and to use ChatGPT 3.5 to lower the reading level of these materials to a 6th grade level.

Methods: Four validated readability tests, the Gunning Fog Index (GFI), Flesch-Kincaid Grade Level (FKGL), Coleman-Liau Index (CLI), and Simple Measure of Gobbledygook (SMOG) Index, were used to assess the readability of 96 online ACR patient education materials on rheumatic conditions and treatments [2,3]. In addition, a validated online readability tool provided a consensus average reading level and a mean reading age based on eight popular readability formulas [4]. The mean, median, interquartile range, and standard deviation (SD) were calculated for each score. The text of each education material was entered into ChatGPT, portion by portion, with the prompt “Rewrite the following text at a 5th grade reading level:”, with the goal of obtaining text at the desired 6th grade reading level. A 5th grade target was used in the prompt because prior research has shown that requesting this level yields output that better approximates the desired reading level [5]. The authors reviewed the output to ensure accuracy and valid syntax. The converted excerpts were then evaluated for readability with the same online readability software [4].
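For readers who want to see what the four tests actually measure, the sketch below implements their standard published formulas together with the summary statistics named above. It is not the authors' online tool (which the abstract does not name); the helper names (`count_syllables`, `text_stats`, `describe`, etc.) are hypothetical. Two simplifications are assumed and should be treated as such: syllables are estimated by counting vowel groups rather than by dictionary lookup, and every word of three or more syllables counts as "complex" (the full Gunning Fog rules exclude proper nouns, familiar jargon, and some suffixed forms), so scores will differ somewhat from commercial calculators.

```python
import math
import re
import statistics


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a rough heuristic, not a dictionary lookup)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A final silent "e" (as in "rate") usually adds no syllable; "-le" (as in "table") usually does.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> dict:
    """Count the sentence, word, letter, and syllable totals the four formulas need."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    syllables = [count_syllables(w) for w in words]
    return {
        "sentences": max(len(sentences), 1),
        "words": max(len(words), 1),
        "letters": sum(ch.isalpha() for w in words for ch in w),
        "syllables": sum(syllables),
        # Simplification: every word of 3+ syllables counts as "complex"/polysyllabic.
        "polysyllables": sum(1 for n in syllables if n >= 3),
    }


def flesch_kincaid_grade(s: dict) -> float:
    return 0.39 * s["words"] / s["sentences"] + 11.8 * s["syllables"] / s["words"] - 15.59


def gunning_fog(s: dict) -> float:
    return 0.4 * (s["words"] / s["sentences"] + 100 * s["polysyllables"] / s["words"])


def coleman_liau(s: dict) -> float:
    letters_per_100 = 100 * s["letters"] / s["words"]
    sentences_per_100 = 100 * s["sentences"] / s["words"]
    return 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8


def smog(s: dict) -> float:
    # SMOG is designed for samples of 30+ sentences; scaling to 30 is an approximation otherwise.
    return 1.0430 * math.sqrt(s["polysyllables"] * 30 / s["sentences"]) + 3.1291


def describe(scores: list[float]) -> dict:
    """Mean, median, interquartile range, and sample SD for one readability score across documents."""
    q1, _, q3 = statistics.quantiles(scores, n=4)
    return {
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "iqr": q3 - q1,
        "sd": statistics.stdev(scores),
    }


if __name__ == "__main__":
    # Hypothetical stand-in texts, not ACR material.
    docs = [
        "The cat sat on the mat. It was a good cat.",
        "Rheumatoid arthritis is a chronic autoimmune inflammatory condition "
        "characterized by persistent synovitis.",
    ]
    stats = [text_stats(d) for d in docs]
    fkgl_scores = [flesch_kincaid_grade(s) for s in stats]
    print([round(x, 2) for x in fkgl_scores])
    print(describe(fkgl_scores))
```

All four formulas reward short sentences and short words, which is why a rewrite that mainly splits sentences and swaps polysyllabic medical terms for plain ones lowers every score at once.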

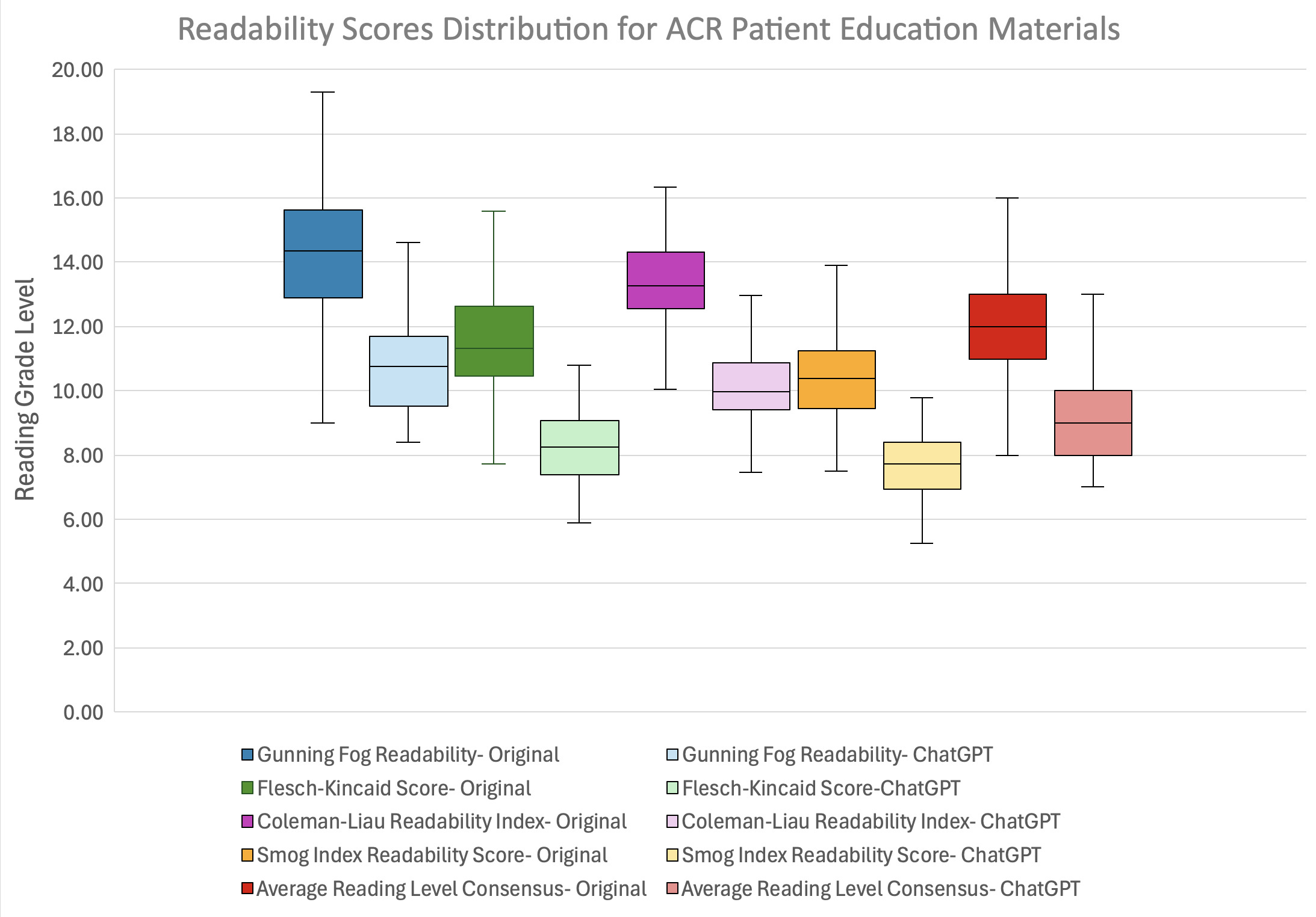

Results: The mean (± SD) readability scores of the 96 original ACR patient education materials were 14.24 (1.85) for GFI, 11.50 (1.74) for FKGL, 13.31 (1.42) for CLI, and 10.33 (1.29) for SMOG. All of the ACR materials had average reading level scores above the 8th grade level, with a mean at the 12th grade level (12.02 ± 1.35). After simplification with ChatGPT, the average reading level of the materials ranged from grade 7 to grade 13, with a mean of 9.35 ± 1.12. The mean (± SD) readability scores of the simplified versions were 10.70 (1.39) for GFI, 8.29 (1.21) for FKGL, 10.18 (1.11) for CLI, and 7.68 (1.05) for SMOG.
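The short calculation below restates the reported means as two numbers per score: how many grade levels the ChatGPT rewrite removed, and how far the simplified text still sits above the AMA's 6th grade target. It uses only the means printed in the Results; without per-document scores it cannot test statistical significance, and the dictionary and function names are hypothetical.

```python
# Mean scores copied from the Results paragraph: (original, ChatGPT-simplified).
REPORTED_MEANS = {
    "GFI": (14.24, 10.70),
    "FKGL": (11.50, 8.29),
    "CLI": (13.31, 10.18),
    "SMOG": (10.33, 7.68),
    "Consensus grade": (12.02, 9.35),
}
TARGET_GRADE = 6.0  # AMA-recommended ceiling cited in the Background


def compare(means: dict, target: float) -> dict:
    """Per score: grade levels removed by simplification, and grades still above the target."""
    return {
        name: {"drop": round(orig - simplified, 2), "above_target": round(simplified - target, 2)}
        for name, (orig, simplified) in means.items()
    }


for name, row in compare(REPORTED_MEANS, TARGET_GRADE).items():
    print(f"{name:<16} lowered by {row['drop']:.2f}, still {row['above_target']:.2f} above grade 6")
```

For the consensus grade, the mean fell by 2.67 grade levels yet remained 3.35 grade levels above the target, which is the gap the Conclusion refers to.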

Conclusion: All of the ACR patient education materials evaluated are written above the reading level recommended by the AMA. Although ChatGPT significantly reduced the reading level of the materials (mean consensus grade level 12.02 before vs 9.35 after), none of the simplified materials reached the desired 6th grade level. Further research is warranted to refine the use of ChatGPT or other AI tools for rewriting patient information accurately. We support the ACR’s efforts to develop patient education materials but recommend simplifying the materials to improve their readability, aiming for a 6th grade reading level.

To cite this abstract in AMA style:

Nguyen Q, Moss A, Iyer P. Readability Analysis of the American College of Rheumatology Patient Education Material [abstract]. Arthritis Rheumatol. 2024; 76 (suppl 9). https://acrabstracts.org/abstract/readability-analysis-of-the-american-college-of-rheumatology-patient-education-material/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/readability-analysis-of-the-american-college-of-rheumatology-patient-education-material/