Session Information

Session Type: Poster Session B

Session Time: 10:30AM-12:30PM

Background/Purpose: Rheumatologic emergencies are severe, with mortality rates of up to 50% even with timely intervention. Associated morbidity includes permanent organ failure and significant disability. This study explores the ability of several AI chatbots, which use natural language processing and machine learning, to recognize emergent rheumatologic situations.

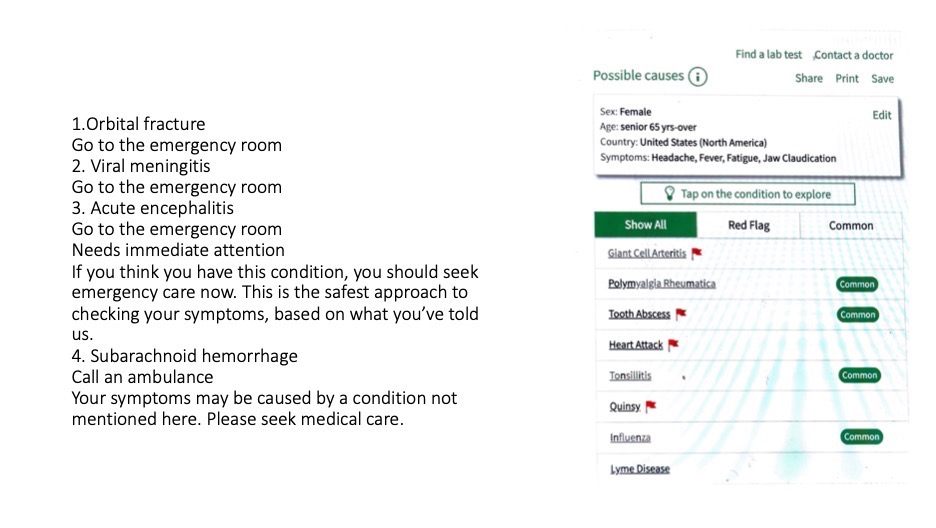

Methods: We selected seven AI healthcare chatbots based on whether they were freely accessible, focused on medical symptoms, and capable of providing diagnostic triage (Table 1). A comprehensive literature review identified common rheumatologic emergencies and published cases that could serve as standards for comparing diagnostic accuracy across different AI apps. Eight distinct use cases were developed and tested across all seven chatbots, resulting in 56 total outcomes. The primary outcomes were the accuracy of the primary diagnosis assigned by the chatbot (yes/no) and the effectiveness of triage (yes/no). Appropriate diagnosis was assessed based on the alignment of the chatbot’s response with the established standard for each case. Effective triage was evaluated by comparing the chatbot’s recommendation with the recognized urgency level for each scenario. Chatbots were also evaluated based on whether they could be integrated with health systems, were multilingual, provided a differential diagnosis, and elicited past medical history (Table 1). Two clinicians independently adjudicated each scenario to assess the accuracy of diagnosis and the effectiveness of triage; disagreements were resolved by discussion. An example scenario is provided in Figure 1.
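The scoring scheme described in Methods (one yes/no diagnosis outcome and one yes/no triage outcome per case and chatbot) can be sketched as follows. This is a minimal illustration only: the `Outcome` class, the `rate` helper, and the case and chatbot labels are hypothetical names, and the toy data are not the study's results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    case_id: str             # hypothetical case label
    chatbot: str             # hypothetical chatbot label
    diagnosis_correct: bool  # adjudicated yes/no: primary diagnosis matched the standard
    triage_effective: bool   # adjudicated yes/no: recommendation matched the urgency level


def rate(outcomes: list[Outcome], field: str) -> float:
    """Proportion of outcomes for which the named yes/no field is True."""
    if not outcomes:
        raise ValueError("no outcomes to score")
    return sum(getattr(o, field) for o in outcomes) / len(outcomes)


# Toy data only: two cases x two chatbots, NOT the study's results.
toy = [
    Outcome("case_1", "bot_a", True, True),
    Outcome("case_1", "bot_b", False, True),
    Outcome("case_2", "bot_a", False, False),
    Outcome("case_2", "bot_b", False, True),
]

print(rate(toy, "diagnosis_correct"))  # 0.25
print(rate(toy, "triage_effective"))   # 0.75
```

In the study design, the same tabulation would cover eight cases and seven chatbots, giving 56 outcomes per measure.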

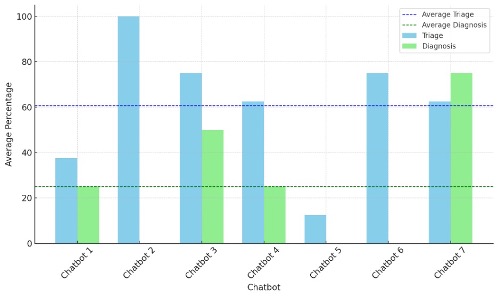

Results: The AI chatbots demonstrated a range of performance levels in diagnosing rheumatologic emergencies. Figure 1 shows examples of two chatbots’ output in response to a case of Giant Cell Arteritis. Across the 56 outcomes, the overall diagnostic accuracy was 28.5%, indicating that fewer than a third of the chatbot-generated diagnoses matched the correct diagnosis. Accuracy varied considerably across chatbots, with some showing higher proficiency in recognizing certain conditions than others. The chatbots performed better on triage recommendations, with an overall triage success rate of 62.5% (Figure 2). However, there were notable instances where the chatbots either underestimated the severity of the condition or provided overly cautious recommendations. All chatbots could be integrated with health systems, only 2 were multilingual, and 5 provided differential diagnoses. Few were able to elicit past medical history (Table 1).
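As a rough arithmetic check, the reported rates can be related to whole-number counts out of the 56 outcomes. The abstract does not state these counts, so the sketch below only finds which counts would be consistent with the reported percentages; the `consistent_counts` helper and its 0.1-point tolerance are assumptions made for illustration.

```python
# Sketch: which whole-number counts out of 56 are consistent with the reported rates?
# The counts themselves are NOT stated in the abstract; this only checks arithmetic.

TOTAL_OUTCOMES = 8 * 7  # eight cases x seven chatbots, as described in Methods


def consistent_counts(reported_pct: float, total: int, tolerance: float = 0.1) -> list[int]:
    """Return counts k where 100 * k / total lies within `tolerance` points of reported_pct."""
    return [k for k in range(total + 1) if abs(100 * k / total - reported_pct) <= tolerance]


diagnosis_counts = consistent_counts(28.5, TOTAL_OUTCOMES)
triage_counts = consistent_counts(62.5, TOTAL_OUTCOMES)

print(TOTAL_OUTCOMES)    # 56
print(diagnosis_counts)  # [16]
print(triage_counts)     # [35]
```

Under these assumptions, 35 of 56 gives exactly 62.5%, while 16 of 56 is about 28.57%, which matches the reported 28.5% only to within rounding.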

Conclusion: Seven common AI healthcare chatbots had an overall diagnostic accuracy of 28.5% and a triage success rate of 62.5%. They showed potential for early triage of rheumatologic emergencies but often failed to suggest the correct diagnosis. Limited training on rheumatic diagnoses likely contributes to failure to identify these conditions, inconsistent symptom recognition, and general rather than specific diagnoses. Future work should focus on training AI chatbots on medical data enriched with rheumatologic diagnoses to see whether performance improves.

Accessibility features, educational resources, and integration with healthcare systems are available in all chatbots.

Symptom analysis and triage: able to analyze symptoms and triage.

Multilingual: supports languages other than English.

Past Medical History: "Limited" means the chatbot asks questions about some chronic diseases.

A 75-year-old woman presents with fever, fatigue, malaise, severe headache in both temples, and discomfort while chewing food for about one week. She also experienced an isolated episode of transient diplopia a few days ago. Her temperature is 37.8°C (100.1°F). Diagnosis: Giant Cell Arteritis. Triage recommendation: ER.

To cite this abstract in AMA style:

Fadairo-Azinge A, Yazdany J, Sakumoto M. AI-Driven Precision in Rheumatologic Emergencies: Unveiling the Capability of Top Healthcare Chatbots [abstract]. Arthritis Rheumatol. 2024; 76 (suppl 9). https://acrabstracts.org/abstract/ai-driven-precision-in-rheumatologic-emergencies-unveiling-the-capability-of-top-healthcare-chatbots/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/ai-driven-precision-in-rheumatologic-emergencies-unveiling-the-capability-of-top-healthcare-chatbots/