Session Information

Session Type: Poster Session C

Session Time: 10:30AM-12:30PM

Background/Purpose: In this work, we study the ability of generative artificial intelligence (AI) based tools to diagnose pediatric rheumatological diseases. Specifically, we seek to answer two questions: 1) What is the impact of symptomatology on the robustness of output provided by AI tools? 2) What is the impact of non-technical terminology on the diagnostic accuracy of AI tools, and could these tools be safe for self-diagnosis? We examined the ability of AI to diagnose four connective tissue diseases: juvenile dermatomyositis (JDM), lupus, systemic sclerosis (SSc), and mixed connective tissue disease (MCTD).

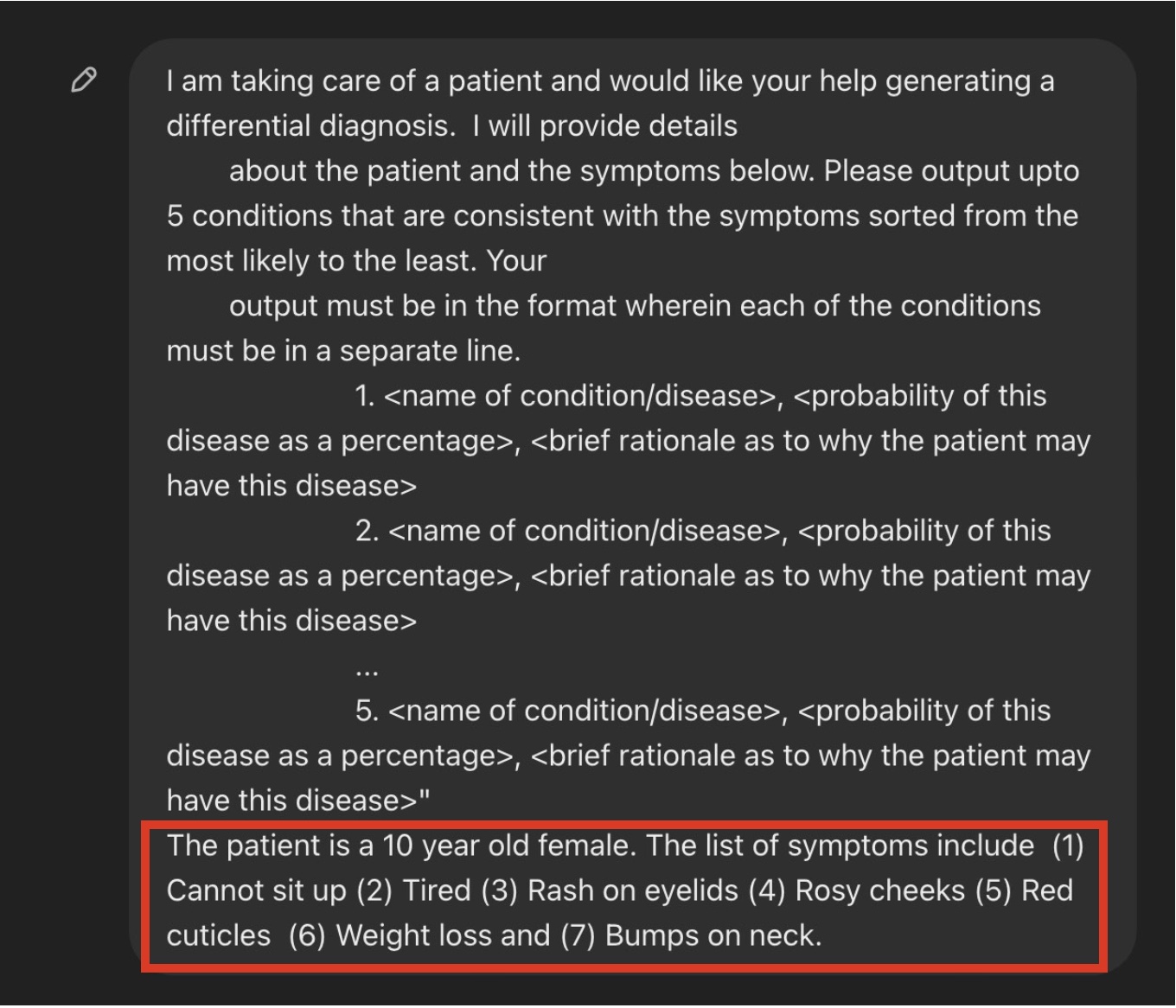

Methods: We compared two large language models, ChatGPT 3.5 and Claude Sonnet. Both systems were provided with a standardized prompt containing a variable symptom list. We obtained lists of common symptoms for these diseases from a pediatric rheumatology textbook (Cassidy and Petty). Each symptom in these lists had an associated probability. We then generated symptom lists based on these probabilities and entered them into each model using the standardized prompt. We generated 250 symptom lists using textbook descriptions of the symptoms, and 1000 symptom lists with non-medical language for each of the four disorders we studied. We then parsed the output to determine whether the AI generated the correct diagnosis.
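The sampling and parsing steps described above could be sketched roughly as follows. This is a minimal illustration, not the authors' code: the symptom names and probabilities, the prompt wording, the assumption that each symptom is drawn independently, and the function names `sample_symptom_list`, `build_prompt`, and `mentions_diagnosis` are all hypothetical.

```python
import random

# Hypothetical symptom probabilities for illustration only; these are NOT
# the values from the textbook used in the study.
EXAMPLE_JDM_SYMPTOMS = {
    "proximal muscle weakness": 0.9,
    "Gottron's papules": 0.7,
    "heliotrope rash": 0.6,
    "fatigue": 0.5,
    "dysphagia": 0.2,
}


def sample_symptom_list(symptom_probs, rng):
    """Include each symptom independently with its associated probability.

    Independence between symptoms is an assumption of this sketch.
    """
    return [s for s, p in symptom_probs.items() if rng.random() < p]


def build_prompt(symptoms):
    """Fill a fixed prompt template (assumed wording) with the sampled symptoms."""
    listed = ", ".join(symptoms) if symptoms else "no reported symptoms"
    return (
        "A pediatric patient presents with the following symptoms: "
        f"{listed}. List the five most likely diagnoses."
    )


def mentions_diagnosis(response, aliases):
    """Case-insensitive check for any accepted name of the target diagnosis."""
    text = response.lower()
    return any(alias.lower() in text for alias in aliases)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the sampled list is reproducible
    symptoms = sample_symptom_list(EXAMPLE_JDM_SYMPTOMS, rng)
    print(build_prompt(symptoms))
```

A substring match such as `mentions_diagnosis` is only a crude stand-in for output parsing; it would, for example, count a response that explicitly rules out the target disease as a hit.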

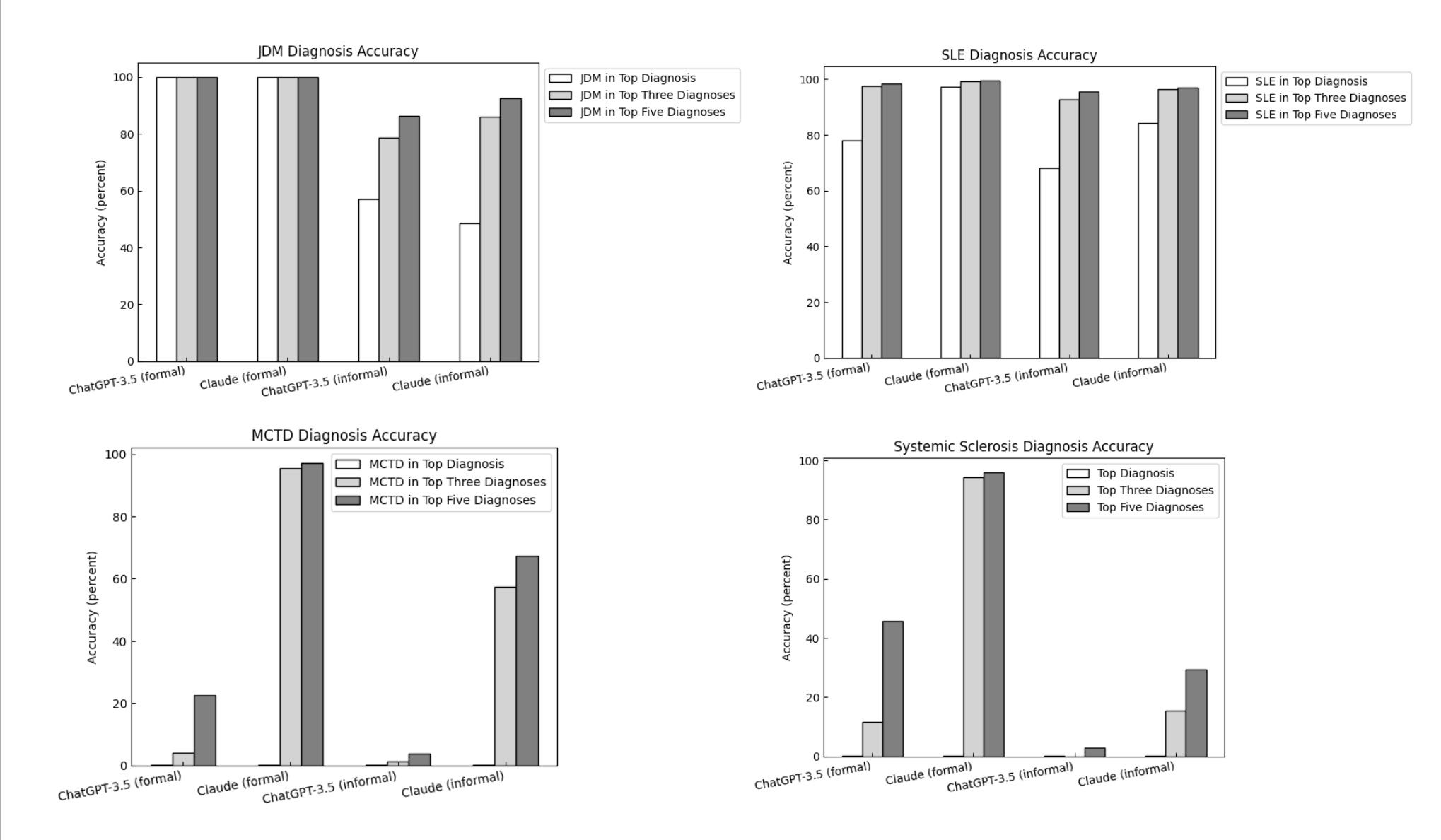

Results: The tools were better at diagnosing conditions when presented with prompts containing standard medical terminology. This is likely related to the presence of this information in the sources used to train these models. AI tools were also able to diagnose diseases with pathognomonic findings more readily than those with more subtle findings. Specifically, any prompt containing “Gottron’s papules” consistently led to a diagnosis of juvenile dermatomyositis.

The diagnostic accuracy of the tools dropped significantly when they were provided with colloquial language. This suggests that they are not able to provide accurate diagnoses when they are not given strictly clinical language.

The tools consistently included rheumatological diagnoses within their top five diagnoses for most prompts, even if provided with non-medical terminology. This indicates that they may assist with triggering the correct referral. Claude Sonnet appeared to outperform ChatGPT, so it may draw from a different collection of medical information.

Conclusion: We examined the ability of AI to diagnose four pediatric rheumatological diseases accurately (JDM, lupus, MCTD, and SSc). We noted that AI is more likely to diagnose diseases accurately when there are clear pathognomonic findings (e.g., Gottron’s papules). We determined that the diagnostic accuracy of AI drops when it is presented with non-medical terminology, suggesting that it is drawing information primarily from medical sources. As a result, AI may be neither effective nor safe for individuals who are attempting to self-diagnose. Further improvement is required in these AI systems before they can be safely used by clinicians for diagnostic support.

To cite this abstract in AMA style:

Sriram I, Tarshish G, Curran M. Accuracy of AI Tools for Diagnosis of Connective Tissue Disease [abstract]. Arthritis Rheumatol. 2024; 76 (suppl 9). https://acrabstracts.org/abstract/accuracy-of-ai-tools-for-diagnosis-of-connective-tissue-disease/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/accuracy-of-ai-tools-for-diagnosis-of-connective-tissue-disease/