Session Information

Date: Sunday, November 10, 2019

Title: Education Poster

Session Type: Poster Session (Sunday)

Session Time: 9:00AM-11:00AM

Background/Purpose: The Pennsylvania Rheumatology Objective Structured Clinical Examination (ROSCE) is an annual assessment of rheumatology fellows’ communication skills. Fellows complete several standardized patient (SP) encounters involving complex communication issues in rheumatology. We revised our assessment tools to align with the Accreditation Council for Graduate Medical Education (ACGME) internal medicine subspecialty milestones and to compare fellow performance across cases. In this study, we evaluated the comparability of fellow self-evaluations and faculty evaluations in the ROSCE within ACGME competency domains.

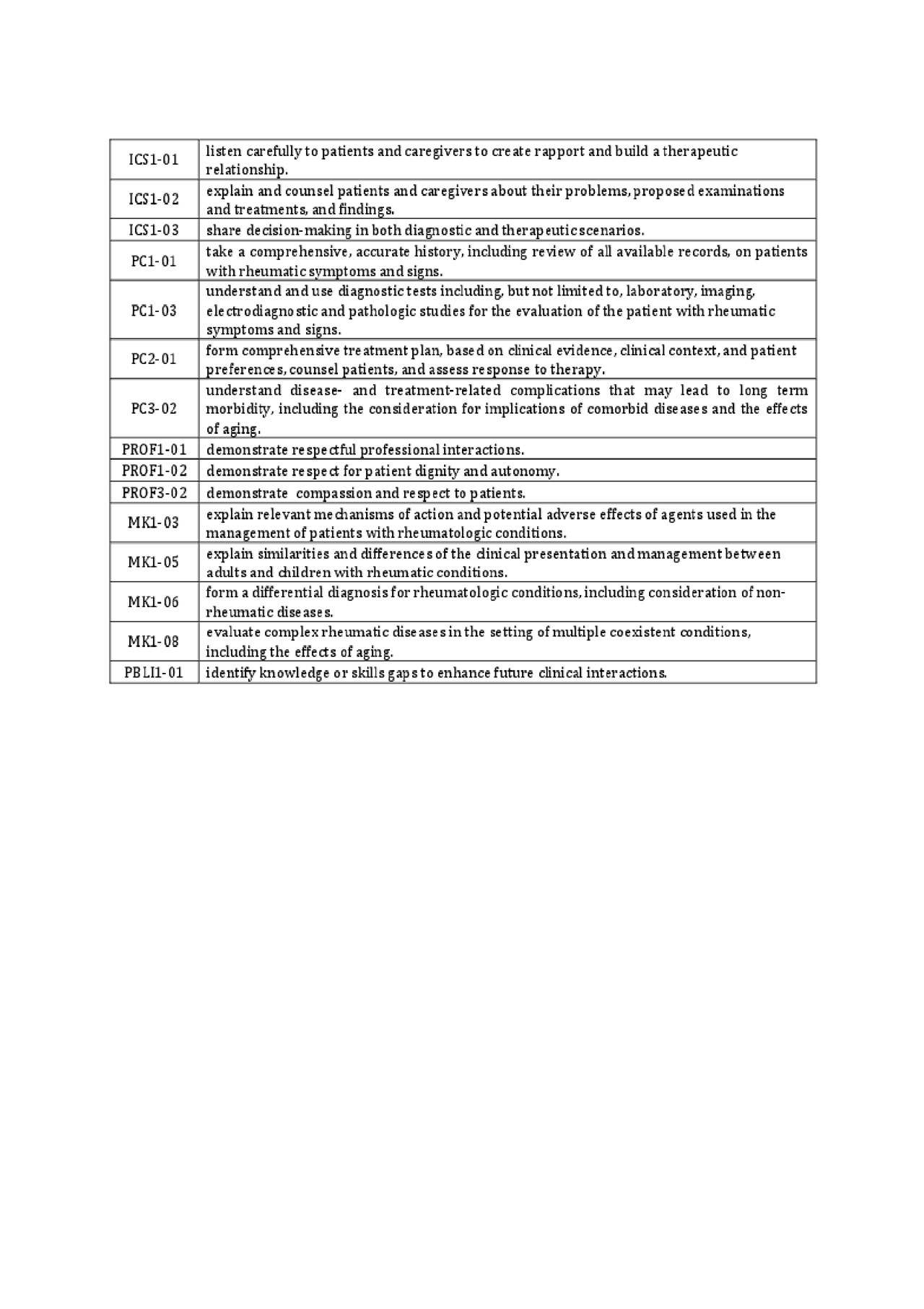

Methods: Sixteen trainees from 8 rheumatology programs participated in 7 SP scenarios and were observed by faculty raters. Content validity was established by consensus of 10 rheumatology fellowship program directors. We recorded faculty and fellow feedback using previously developed 9-point Likert scale instruments. To ensure response process validity, faculty raters were trained on the scoring forms before the ROSCE; scores were recorded electronically and transferred to Excel for analysis. Fellows completed self-assessment forms after the encounters. Items were mapped to milestone categories within the ACGME competencies of medical knowledge (MK), interpersonal and communication skills (ICS), patient care (PC), practice-based learning and improvement (PBLI), and professionalism (PROF) (see Figure 1). Narrative comments were also recorded.

Results: Sixteen faculty and 16 fellow feedback forms from 7 ROSCE cases were analyzed. Across all cases, 99 questions were scored (15 MK, 48 ICS, 9 PC, 1 PBLI, and 26 PROF), corresponding to 15 rheumatology milestones. Overall, average faculty and fellow ratings were comparable, both for individual milestone categories and when grouped by competency domain. However, fellow and faculty ratings were generally poorly correlated, except for medical knowledge (r = 0.67 for MK1-03; r = 0.70 for MK1-08). Faculty ratings tended to be higher than fellow self-ratings, most prominently in the MK and PROF domains overall and in the MK1-03, PROF3-02, PC2-01, and PC1-03 milestones specifically (see Figure 2).
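The two comparisons in the Results (mean ratings grouped by competency domain, and a Pearson correlation between paired faculty and fellow ratings for each milestone) can be sketched as follows. This is a minimal illustration, not the authors' analysis, which was done in Excel. The milestone codes come from the abstract, but the ratings are hypothetical values on the 9-point scale, and the helper names `domain_of`, `domain_means`, and `pearson_r` are invented for this sketch.

```python
from collections import defaultdict
from itertools import takewhile
from math import sqrt

# Hypothetical 9-point Likert ratings: one (faculty, fellow self-rating) pair
# per fellow, keyed by milestone code. Illustrative values only, not study data.
RATINGS = {
    "MK1-03": [(7, 6), (8, 7), (5, 5), (9, 7), (6, 4)],
    "PROF3-02": [(8, 6), (8, 7), (7, 7), (8, 6), (9, 7)],
}


def domain_of(milestone):
    """Competency domain is the leading letters of the code, e.g. 'PROF3-02' -> 'PROF'."""
    return "".join(takewhile(str.isalpha, milestone))


def domain_means(ratings):
    """Mean faculty rating and mean fellow rating for each competency domain."""
    totals = defaultdict(lambda: [0, 0, 0])  # faculty sum, fellow sum, count
    for milestone, pairs in ratings.items():
        acc = totals[domain_of(milestone)]
        for faculty, fellow in pairs:
            acc[0] += faculty
            acc[1] += fellow
            acc[2] += 1
    return {d: (fac / n, fel / n) for d, (fac, fel, n) in totals.items()}


def pearson_r(pairs):
    """Pearson correlation between paired faculty and fellow ratings (NaN if no spread)."""
    n = len(pairs)
    mx = sum(f for f, _ in pairs) / n
    my = sum(s for _, s in pairs) / n
    cov = sum((f - mx) * (s - my) for f, s in pairs)
    sx = sqrt(sum((f - mx) ** 2 for f, _ in pairs))
    sy = sqrt(sum((s - my) ** 2 for _, s in pairs))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


if __name__ == "__main__":
    for domain, (fac, fel) in sorted(domain_means(RATINGS).items()):
        print(f"{domain}: faculty mean {fac:.1f}, fellow mean {fel:.1f}")
    for milestone, pairs in RATINGS.items():
        print(f"{milestone}: r = {pearson_r(pairs):.2f}")
```

With these made-up numbers, faculty rate both domains higher on average (MK 7.0 vs 5.8; PROF 8.0 vs 6.6), yet r is about 0.85 for MK1-03 and 0.00 for PROF3-02. A consistent offset between raters and poor correlation between them can coexist, which matches the pattern reported above.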

Narrative comments from faculty (n=224) mostly concerned use of medical jargon (26%), clarity of explanation (12%), and knowledge base (12%). Of the fellow comments (n=112), 24% concerned the difficulty of the case and 33% concerned areas the fellows had forgotten during the SP encounter.

Conclusion: Faculty and fellow evaluations of performance were similar within each ACGME competency, although fellows tended to rate themselves lower than faculty did. Correlation between faculty and fellow ratings was generally low, except for the more “objective” medical knowledge milestones; this is likely due to the relatively small spread in ratings of communication skills. We plan to further evaluate the validity of our ROSCE and to revise our assessments to reflect milestone achievement levels. In the future, we hope to evaluate how competency ratings in the ROSCE correlate with program director assessments of fellows.
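The explanation offered in the Conclusion, that a small spread in ratings depresses correlation, is a general statistical effect often called range restriction. The toy example below is a sketch under stated assumptions: the rating pairs are hypothetical, chosen so that the fellow rating always differs from the faculty rating by exactly one point. Rater agreement is therefore identical in both sets, and only the spread of ratings differs. The names `ALL_PAIRS`, `NARROW_PAIRS`, and `pearson_r` are invented for illustration.

```python
from math import sqrt


def pearson_r(pairs):
    """Pearson correlation of (x, y) pairs; NaN if either variable has zero spread."""
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    cov = sum((x - mx) * (y - my) for x, y in pairs)
    sx = sqrt(sum((x - mx) ** 2 for x, _ in pairs))
    sy = sqrt(sum((y - my) ** 2 for _, y in pairs))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


# Hypothetical (faculty, fellow) ratings on a 9-point scale. In every pair the
# two ratings differ by exactly 1 point, so disagreement is the same throughout.
ALL_PAIRS = [(2, 3), (3, 2), (4, 5), (5, 4), (7, 8), (8, 7), (9, 8), (8, 9)]

# Keep only pairs at the top of the scale, mimicking ratings clustered at 7 to 9.
NARROW_PAIRS = [(f, s) for f, s in ALL_PAIRS if f >= 7]

r_all = pearson_r(ALL_PAIRS)
r_narrow = pearson_r(NARROW_PAIRS)

if __name__ == "__main__":
    print(f"full spread:   r = {r_all:.2f}")
    print(f"narrow spread: r = {r_narrow:.2f}")
```

Here r falls from about 0.92 across the full range to 0.00 within the narrow band, even though each individual pair disagrees by the same single point. This illustrates why clustered communication-skill ratings could yield low correlations without implying worse agreement.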

Figure 1: ROSCE 2018 abstract table

To cite this abstract in AMA style:

Jayatilleke A, Denio A. Evaluation of ACGME Competencies in a Rheumatology Observed Structured Clinical Examination [abstract]. Arthritis Rheumatol. 2019; 71 (suppl 10). https://acrabstracts.org/abstract/evaluation-of-acgme-competencies-in-a-rheumatology-observed-structured-clinical-examination/. Accessed .
