Session Information

Session Type: Poster Session (Tuesday)

Session Time: 9:00AM-11:00AM

Background/Purpose: Contemporary technologies offer potential solutions to improve and automate data collection in randomized clinical trials by transitioning assessments from the clinic to the real world. Mobile health (mHealth) applications (apps) paired with smartphone-embedded sensors offer the possibility of automating objective physical function tests by tracking, recording, and analyzing quantitative participant data. An automated mHealth app gives clinicians and researchers the opportunity to reliably monitor physical function at more frequent intervals in a real-world setting. To create this tool, we collected preliminary data to establish the test-retest reliability and validity of an automated mHealth app for assessing performance on a 30-second chair-stand test, compared with the gold standard assessment technique.

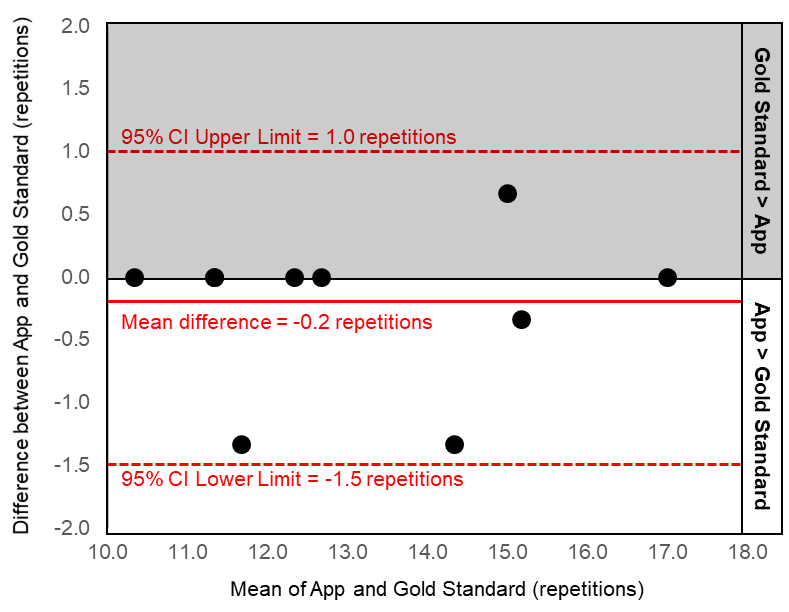

Methods: We recruited 10 healthy individuals to participate in two data collection sessions separated by 7 days. Individuals were at least 21 years old, able to comfortably walk 20 meters without an assistive device, and had an iPhone 5 or higher. We developed an mHealth app that uses algorithms associated with the smartphone’s embedded motion sensors (gravitometer and accelerometer) to automate the count of repetitions during the 30-second chair-stand test. During the test, the participant’s smartphone was positioned inside an elastic strap at chest level. Participants performed three trials in which they completed as many repetitions of standing up from and sitting down on a standard chair as possible in 30 seconds. For each trial, the mHealth app counted the number of repetitions completed by the participant. During the same three trials, an assessor manually counted the number of repetitions as the gold standard for assessing performance. The number of repetitions was averaged across the three trials for the mHealth app and for the gold standard. Bland-Altman plots and intraclass correlation coefficients (ICCs) were used to establish agreement and inter-rater reliability, respectively, between the gold standard and the mHealth app. ICCs were also used to assess test-retest reliability between the two sessions for the gold standard and for the mHealth app.
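The abstract does not describe the counting algorithm itself, so the following is only an illustrative sketch of one common way to count repetitions from a motion signal: hysteresis thresholding. It assumes a hypothetical, already pre-processed signal (for example, a smoothed and normalized proxy for trunk elevation derived from the motion sensors), and the function name `count_repetitions` and the `low`/`high` thresholds are assumptions introduced here, not the authors' method.

```python
def count_repetitions(signal, low, high):
    """Count sit-to-stand repetitions in a 1-D signal using hysteresis.

    Assumption (not from the abstract): `signal` is a pre-processed sequence
    where larger values mean the participant is closer to standing.
    A repetition is counted each time the signal rises above `high`
    after having been below `low`, which ignores small jitter in between.
    """
    if low >= high:
        raise ValueError("low threshold must be smaller than high threshold")

    count = 0
    seated = True  # the test starts with the participant seated
    for value in signal:
        if seated and value > high:
            # Participant reached standing: count one repetition.
            count += 1
            seated = False
        elif not seated and value < low:
            # Participant returned to the chair: ready for the next repetition.
            seated = True
    return count


# Hypothetical, made-up samples for illustration only (not study data).
example_signal = [0.0, 0.2, 0.9, 1.0, 0.5, 0.1, 0.0, 0.8, 1.0, 0.6, 0.05, 0.95]
example_count = count_repetitions(example_signal, low=0.2, high=0.8)
```

Using two thresholds rather than one keeps a noisy signal that wobbles near a single cutoff from being counted as several repetitions.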

Results: The majority of our sample was female (80%), with a mean ± standard deviation age of 30 ± 7 years and body mass index of 24.7 ± 5.4 kg/m². The Bland-Altman plots demonstrate good agreement between the gold standard test and the mHealth app, since no data points fell outside the 95% limits of agreement (upper limit: 1.0 chair stands; lower limit: -1.5 chair stands; Figure 1). Additionally, there is excellent reliability between the gold standard and the mHealth app (ICC(2,k) = 0.98). When comparing across the two sessions, the gold standard (ICC(2,k) = 0.93) and the mHealth app (ICC(2,k) = 0.89) demonstrate similar test-retest reliability.
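For readers unfamiliar with these statistics, the sketch below shows how 95% limits of agreement and an ICC(2,k) are conventionally computed. It assumes the usual definitions (limits of agreement as mean difference ± 1.96 sample standard deviations, and ICC(2,k) as the two-way random-effects, absolute-agreement, average-measures form); the abstract does not state which software or exact settings were used. The function names and the sample values are hypothetical and are not the study data.

```python
from statistics import mean, stdev


def bland_altman_limits(method_a, method_b):
    """Return (bias, lower_limit, upper_limit) for paired measurements."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def icc_2k(ratings):
    """ICC(2,k) for a table with one row per subject, one column per rater."""
    n = len(ratings)     # number of subjects
    k = len(ratings[0])  # number of raters (or sessions)
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Two-way ANOVA sums of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Average-measures, absolute-agreement form.
    return (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)


# Hypothetical per-participant averages (made up for illustration only).
app_counts = [14.0, 16.3, 12.7, 18.0, 15.3]
manual_counts = [14.3, 16.0, 13.0, 18.3, 15.0]

bias, lower, upper = bland_altman_limits(app_counts, manual_counts)
reliability = icc_2k([list(pair) for pair in zip(app_counts, manual_counts)])
```

The same `icc_2k` function applies to test-retest reliability by passing one column per session instead of one column per rater.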

Conclusion: The results suggest that our mHealth app is a reliable and valid tool to objectively quantify performance during the 30-second chair-stand test in healthy individuals. These data will lay the foundation for further development of algorithms to automate other objective physical function tests, as well as for future implementation in clinical trials.

To cite this abstract in AMA style:

Dantas L, Harkey M, Dantas A, Price L, Driban J, McAlindon T. Test-Retest Reliability and Validity of a Mobile Health Application to Automate the 30 Seconds Chair Stand Test – Preliminary Data to Create a Contemporary Instrument for Randomized Clinical Trials [abstract]. Arthritis Rheumatol. 2019; 71 (suppl 10). https://acrabstracts.org/abstract/test-retest-reliability-and-validity-of-a-mobile-health-application-to-automate-the-30-seconds-chair-stand-test-preliminary-data-to-create-a-contemporary-instrument-for-randomized-clinical-t/. Accessed .