Session Information

Session Type: Poster Session C

Session Time: 10:30AM-12:30PM

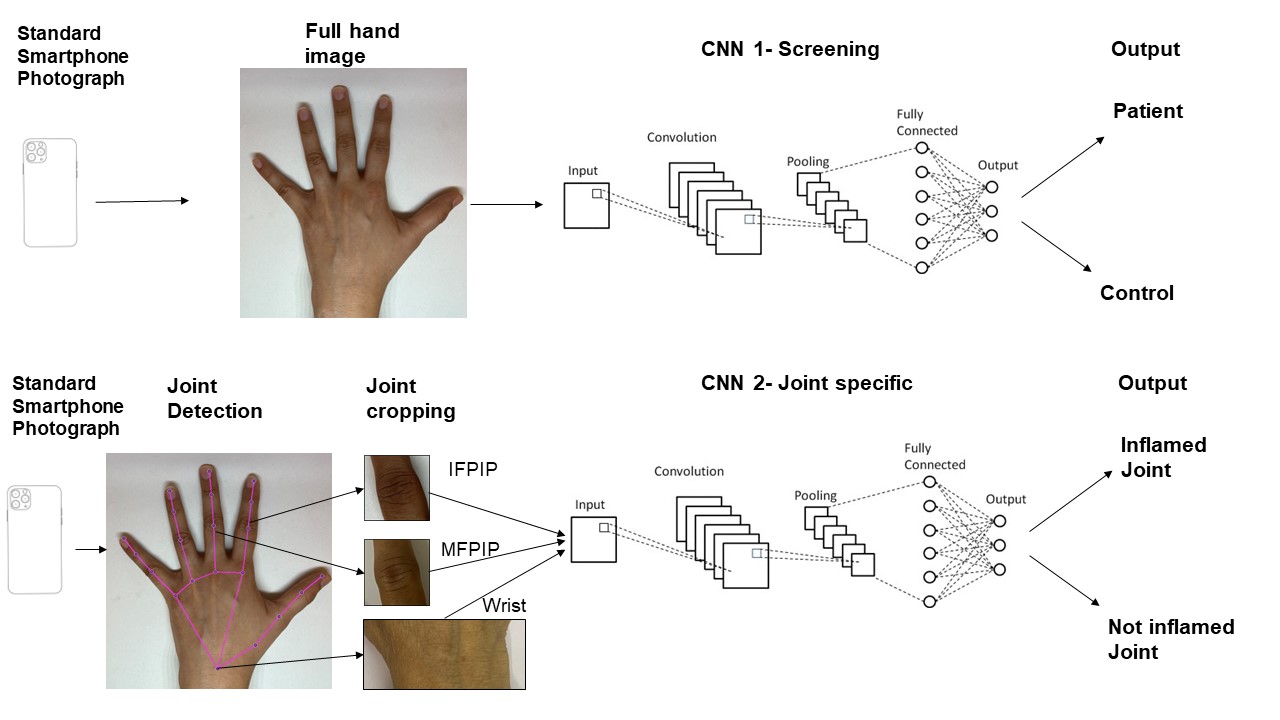

Background/Purpose: Convolutional neural networks (CNNs) have been used to classify medical images. We previously showed proof of principle that CNNs could detect inflammation in three hand joints from cropped photographs in a patient cohort. Here we studied how well a CNN applied to uncropped photographs of the whole hand distinguishes patients with inflammatory arthritis from individuals without it, as a screening step.

Methods: We studied consecutive patients with early (< 2 years) inflammatory arthritis, excluding those with joint deformities, and recruited controls from among patients' relatives and caregivers. All patients underwent a clinical examination for synovitis by one of two rheumatologists. Standardized dorsal photographs of the hands (taken in a photo-box with a white background and standard lighting) were anonymised and cropped to include individual joints. We used pre-trained CNN models and fine-tuned them on our dataset to make them more specific to arthritis-related features. We first trained an Inception-ResNet-v2 backbone CNN, modified for a two-class output (patient vs control), on the uncropped photographs of the hand (fig 1). The dataset was divided into training and test sets in an 80:20 ratio. Inception-ResNet-v2 CNNs were similarly trained on cropped photographs of three joints: the middle finger proximal interphalangeal (MFPIP), the index finger PIP (IFPIP) and the wrist. In the joint-specific evaluations, the control group included both healthy individuals and patients in whom that joint was not swollen. Each model, for the whole hand and for each cropped joint, was evaluated on accuracy, sensitivity and specificity. Because each training run produces slightly different results, we report representative values.
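The abstract specifies an 80:20 train/test split and evaluation by accuracy, sensitivity and specificity, but not how the split was drawn. The sketch below is a minimal illustration under stated assumptions: it splits at the person level (so the two hands of one participant cannot fall on both sides of the split) and stratifies by class. The record layout `(person_id, label, image_path)`, the function names and both design choices are hypothetical, not the authors' pipeline.

```python
import random
from collections import defaultdict


def patient_level_split(records, test_fraction=0.2, seed=0):
    """Split hypothetical (person_id, label, image_path) records roughly 80:20.

    All images of one person go to the same partition, and patients (label 1)
    and controls (label 0) are split separately so both sets keep the class mix.
    """
    images_by_person = defaultdict(list)
    label_by_person = {}
    for person_id, label, path in records:
        images_by_person[person_id].append((person_id, label, path))
        label_by_person[person_id] = label

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for label in sorted(set(label_by_person.values())):
        people = sorted(p for p, lab in label_by_person.items() if lab == label)
        rng.shuffle(people)
        n_test = round(len(people) * test_fraction)
        for i, person_id in enumerate(people):
            (test if i < n_test else train).extend(images_by_person[person_id])
    return train, test


def screening_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity and specificity of binary predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # share of true patients flagged
        "specificity": tn / (tn + fp),  # share of true controls cleared
    }


# Toy data: 10 hypothetical patients and 10 controls, two hand photos each.
records = [(f"P{i}", 1, f"P{i}_{side}.jpg") for i in range(10) for side in ("L", "R")]
records += [(f"C{i}", 0, f"C{i}_{side}.jpg") for i in range(10) for side in ("L", "R")]
train, test = patient_level_split(records)
print(len(train), len(test))  # 32 8
print(screening_metrics([1, 1, 1, 0, 0], [1, 1, 0, 0, 1]))
```

Splitting by person rather than by image is a common safeguard when one subject contributes several photographs; a purely image-level 80:20 split could place a participant's left and right hands in different sets.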

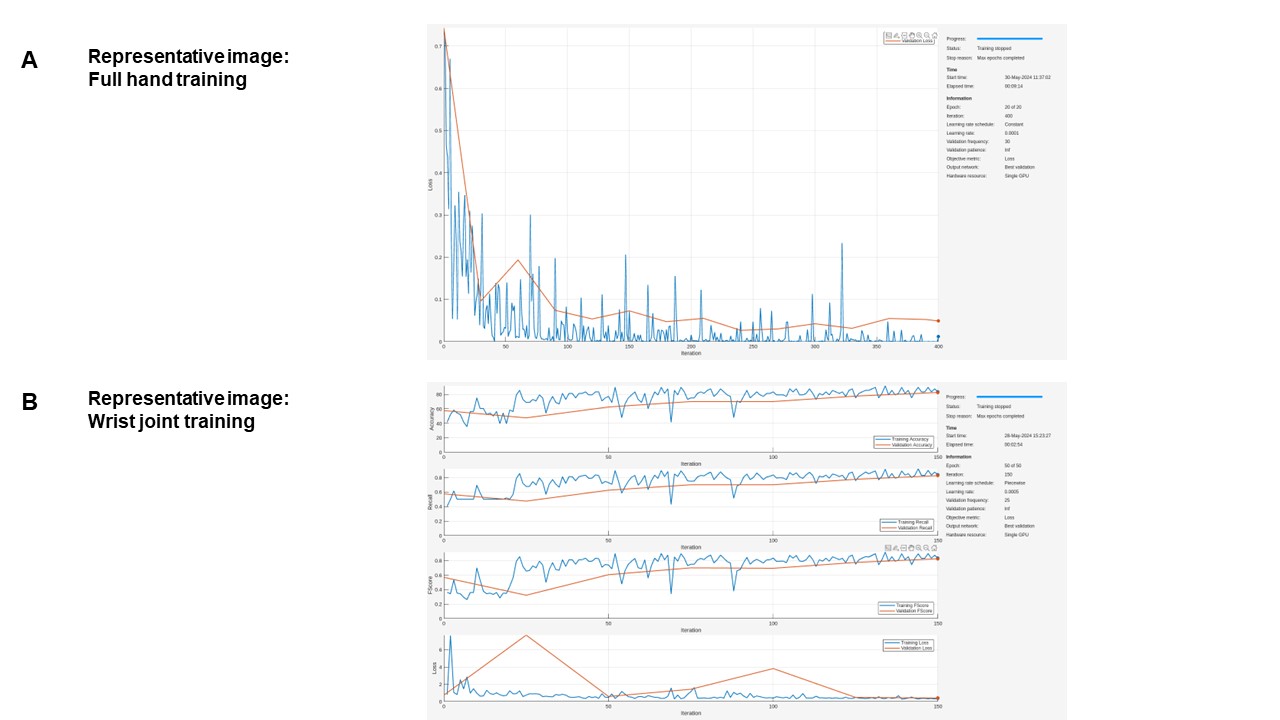

Results: We studied 216 controls (mean age 37.8 years) and 200 patients (mean age 49.5 years; 134 with rheumatoid arthritis, eight with viral arthritis, 13 with peripheral spondyloarthritis and 22 with connective tissue disease). Both hands were involved in 124 patients, and 91 had polyarthritis (>4 swollen joints). The wrist was the most commonly involved joint (173/400), followed by the MFPIP (134) and IFPIP (128). The whole-hand CNN distinguished patients from controls with excellent accuracy (98%), sensitivity (98%) and specificity (98%) (representative image, fig 2A). Among the joint-specific CNNs, accuracy was highest for the wrist (accuracy 80%, sensitivity 88%, specificity 72%; representative image, fig 2B), followed by the IFPIP (79%, 89%, 73%) and MFPIP (76%, 91%, 70%).
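The joint-level figures can be sanity-checked with a standard identity: accuracy is the prevalence-weighted mean of sensitivity and specificity, Acc = π·Se + (1 - π)·Sp, where π is the fraction of positive cases (swollen joints) in the test set. Accuracy must therefore lie between sensitivity and specificity, as it does for all three joints. The sketch below inverts the identity to recover π. It assumes the three figures for a joint come from the same run; because they are rounded, representative values, any implied prevalence is only approximate. Function names are illustrative.

```python
def accuracy_from(sensitivity, specificity, prevalence):
    """Accuracy as the prevalence-weighted mean of sensitivity and specificity."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


def implied_prevalence(accuracy, sensitivity, specificity):
    """Invert accuracy_from(): fraction of positives in the test set implied
    by a reported (accuracy, sensitivity, specificity) triple."""
    if sensitivity == specificity:
        # Accuracy then equals both metrics whatever the class balance,
        # so the prevalence cannot be recovered.
        raise ValueError("prevalence is unidentifiable when sensitivity == specificity")
    return (accuracy - specificity) / (sensitivity - specificity)


# Wrist model, representative values from the Results (rounded percentages).
print(round(implied_prevalence(0.80, 0.88, 0.72), 2))  # 0.5
```

For the wrist this gives about 0.5, which would be consistent with a roughly balanced test set. The whole-hand model (98% on all three metrics) is the degenerate case in which the identity carries no information about class balance.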

Conclusion: We extend our previous results to show that computer vision without feature engineering can distinguish patients from healthy controls on hand photographs with good accuracy. The whole-hand model could serve as a screening step before joint-specific CNNs are applied, improving the robustness of the approach. Future research will focus on validating these findings in larger, more diverse populations, refining the models to improve specificity for individual joints, and testing strategies for integrating this technology into clinical workflows.
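The abstract proposes the whole-hand CNN as a screen ahead of the joint-specific CNNs but does not describe how the two would be combined. A minimal sketch of one possible two-stage cascade follows; the model callables stand in for trained CNNs, and the 0.5 thresholds, the `two_stage_screen` function and the `ScreenResult` type are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Mapping

# Hypothetical model interface: image -> probability of inflammation.
Model = Callable[[object], float]


@dataclass
class ScreenResult:
    hand_probability: float
    screen_positive: bool
    joint_flags: Dict[str, bool] = field(default_factory=dict)


def two_stage_screen(
    hand_image: object,
    joint_crops: Mapping[str, object],
    hand_model: Model,
    joint_models: Mapping[str, Model],
    hand_threshold: float = 0.5,
    joint_threshold: float = 0.5,
) -> ScreenResult:
    """Run the whole-hand screen first; only screen-positive hands are passed
    to the joint-specific models (e.g. 'wrist', 'IFPIP', 'MFPIP')."""
    p = hand_model(hand_image)
    result = ScreenResult(hand_probability=p, screen_positive=p >= hand_threshold)
    if not result.screen_positive:
        return result  # stop early: no joint-level assessment
    for name, crop in joint_crops.items():
        model = joint_models.get(name)
        if model is not None:  # skip joints without a trained model
            result.joint_flags[name] = model(crop) >= joint_threshold
    return result


# Stub models returning fixed probabilities, for illustration only.
stub_joints = {"wrist": lambda img: 0.7, "IFPIP": lambda img: 0.2}
crops = {"wrist": "w.jpg", "IFPIP": "i.jpg", "MFPIP": "m.jpg"}
r = two_stage_screen("hand.jpg", crops, lambda img: 0.9, stub_joints)
print(r.joint_flags)  # {'wrist': True, 'IFPIP': False}
```

Running the high-accuracy whole-hand model first means the less specific joint-level models only see hands already judged likely to be inflamed, which is one way to read the robustness argument in the Conclusion.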

To cite this abstract in AMA style:

Phatak S, Saptarshi R, Sharma V, Chakraborty S, Zanwar A, Goel P. Computer Vision on Standardized Smartphone Photographs as a Screening Tool for Inflammatory Arthritis of the Hand: Results from an Indian Patient Cohort [abstract]. Arthritis Rheumatol. 2024;76(suppl 9). https://acrabstracts.org/abstract/computer-vision-on-standardized-smartphone-photographs-as-a-screening-tool-for-inflammatory-arthritis-of-the-hand-results-from-an-indian-patient-cohort/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/computer-vision-on-standardized-smartphone-photographs-as-a-screening-tool-for-inflammatory-arthritis-of-the-hand-results-from-an-indian-patient-cohort/