Session Information

Session Type: Poster Session A

Session Time: 9:00AM-11:00AM

Background/Purpose: The hand is the second most common anatomic region affected by osteoarthritis (OA). The diagnosis and evaluation of hand OA rely heavily on subjective assessments by experienced physicians, primarily through clinical examinations and radiographs. However, the interpretation of radiographs varies considerably between readers, which makes it difficult to assess hand OA objectively. This abstract presents an automated approach that uses deep learning with convolutional neural networks (CNN) to localize hand joint centers and measure joint angles, as a step toward Joint Space Width (JSW) estimation and hand OA assessment.

Methods: We selected 3,557 hand X-ray images from the Osteoarthritis Initiative (OAI). Two readers manually annotated twelve finger joints on each hand: the distal interphalangeal (DIP), proximal interphalangeal (PIP), and metacarpophalangeal (MCP) joints. Each joint was enclosed within a bounding box measuring 180 × 180 pixels. To increase the diversity of the training data, we applied several image augmentation techniques, including rotation, flipping, scaling, cropping, and mosaic. The data were split into training, validation, and test sets in a 7:1.5:1.5 ratio. Using the YOLOv5 architecture, the model was trained for 100 epochs with stochastic gradient descent (SGD) as the optimizer. The model output provided each joint’s position in coordinate form, which we used to calculate the rotation and inclination angles of the DIP, PIP, and MCP joints. Specifically, we connected the center points of the DIP and PIP joints and took the slope of that line as the rotation angle of the DIP joint. Similarly, we connected the center points of the PIP and MCP joints and took the slope of that line as the rotation angle of the MCP joint. The rotation angle of the PIP joint was computed as the average of these two slopes. Using the joint positions and the computed angles, we cropped image patches from the original hand X-ray images so that the joints were vertically oriented. This vertical orientation is crucial for accurately calculating the joint space width in subsequent steps.
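The angle step described above can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes joint centers are given as (x, y) pixel coordinates with y increasing downward, and that the "slope" is expressed as the angle between the connecting line and the vertical image axis; the function names `segment_angle_deg` and `finger_rotation_angles` are hypothetical.

```python
import math


def segment_angle_deg(upper, lower):
    """Signed angle (degrees) between the line from `upper` to `lower`
    and the vertical image axis. Assumes (x, y) pixels, y pointing down."""
    dx = lower[0] - upper[0]
    dy = lower[1] - upper[1]
    # atan2(dx, dy) is 0 when the segment is exactly vertical.
    return math.degrees(math.atan2(dx, dy))


def finger_rotation_angles(dip, pip, mcp):
    """Rotation angles for one finger from its three joint centers.

    DIP angle: line through the DIP and PIP centers.
    MCP angle: line through the PIP and MCP centers.
    PIP angle: average of the two (a plain mean, which is reasonable
    only when both angles are small and do not wrap around 180 degrees).
    """
    dip_angle = segment_angle_deg(dip, pip)
    mcp_angle = segment_angle_deg(pip, mcp)
    pip_angle = (dip_angle + mcp_angle) / 2.0
    return {"DIP": dip_angle, "PIP": pip_angle, "MCP": mcp_angle}


if __name__ == "__main__":
    # Hypothetical joint centers for a single finger.
    print(finger_rotation_angles(dip=(100, 100), pip=(110, 200), mcp=(115, 320)))
```

Under these assumptions, rotating a cropped patch by the negative of the joint's angle would bring the joint to a vertical orientation; the sign convention depends on the image library used.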

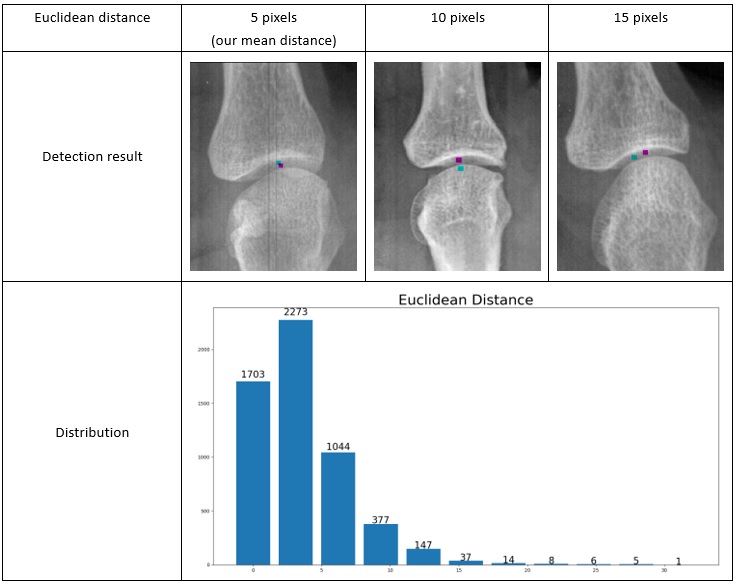

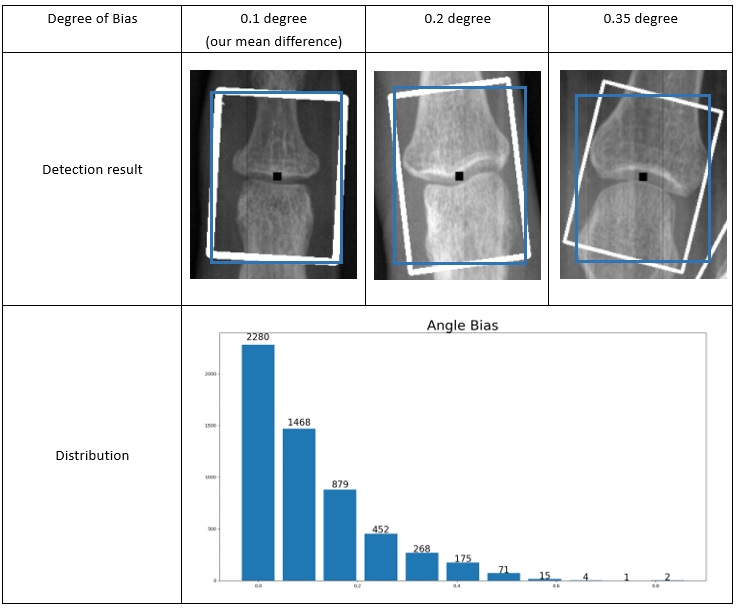

Results: In our evaluation, the average Euclidean distance between the detected joint center and the human-annotated center in the test set was 5.1 pixels (Figure 1). The mean absolute error of the angle estimation step in the test set was 0.144 degrees (Figure 2). During the initial training of YOLOv5, we found 21 human annotation errors in the dataset, corresponding to a human error rate of 0.5%. The model correctly identified 5 of the 6 human errors in the test set, corresponding to a machine error rate of 0.2%. This indicates that our model had a lower error rate than manual annotation.
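The two accuracy measures reported above can be sketched as follows. This is an illustrative Python example, not the authors' evaluation code. It assumes that predicted and annotated joint centers are paired lists of (x, y) pixel coordinates and that angles are in degrees; the function names are hypothetical.

```python
import math


def mean_center_distance(predicted, annotated):
    """Mean Euclidean distance (pixels) between paired joint centers."""
    if len(predicted) != len(annotated) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    distances = [
        math.hypot(px - ax, py - ay)
        for (px, py), (ax, ay) in zip(predicted, annotated)
    ]
    return sum(distances) / len(distances)


def mean_absolute_angle_error(predicted_deg, annotated_deg):
    """Mean absolute difference (degrees) between paired angles."""
    if len(predicted_deg) != len(annotated_deg) or not predicted_deg:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [abs(p - a) for p, a in zip(predicted_deg, annotated_deg)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(mean_center_distance([(0, 0), (3, 4)], [(0, 0), (0, 0)]))
    print(mean_absolute_angle_error([1.0, -2.0], [0.5, -1.0]))
```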

Conclusion: Our deep-learning approach successfully detects each finger joint and its rotation angle. Moreover, our model shows a lower error rate than manual labeling of the joints. It also exhibits high accuracy and holds promise for reducing inter-observer variability in hand OA evaluation. In future studies, we intend to develop an automatic JSW detection algorithm and explore the correlation between clinical symptoms and JSW.

To cite this abstract in AMA style:

Gao Y, Shan J, Ponnusamy R, Blackadar J, Guida C, Driban J, McAlindon T, Zhang M. Automatically Detect Finger Joint Center and Angle on Hand X-ray: A Deep Learning Model [abstract]. Arthritis Rheumatol. 2023; 75 (suppl 9). https://acrabstracts.org/abstract/automatically-detect-finger-joint-center-and-angle-on-hand-x-ray-a-deep-learning-model/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/automatically-detect-finger-joint-center-and-angle-on-hand-x-ray-a-deep-learning-model/