Session Information

Session Type: Poster Session C

Session Time: 10:30AM-12:30PM

Background/Purpose: The rheumatology fellowship application review process demands significant time from program directors and faculty, who manually review dozens of multi-part applications each year. A typical year may yield ~200 applicants and require 50+ hours for the fellowship program director(s) to pre-screen them and decide which applicants should be offered interviews. Each application typically includes a 40-page PDF document and a structured Excel export from the Electronic Residency Application Service (ERAS) with more than 2,500 columns of data per applicant. Whether artificial intelligence (AI) and large language models (LLMs) can concisely summarize fellowship applications, protect applicant privacy, and highlight the criteria needed for subsequent interview decisions remains unexplored.

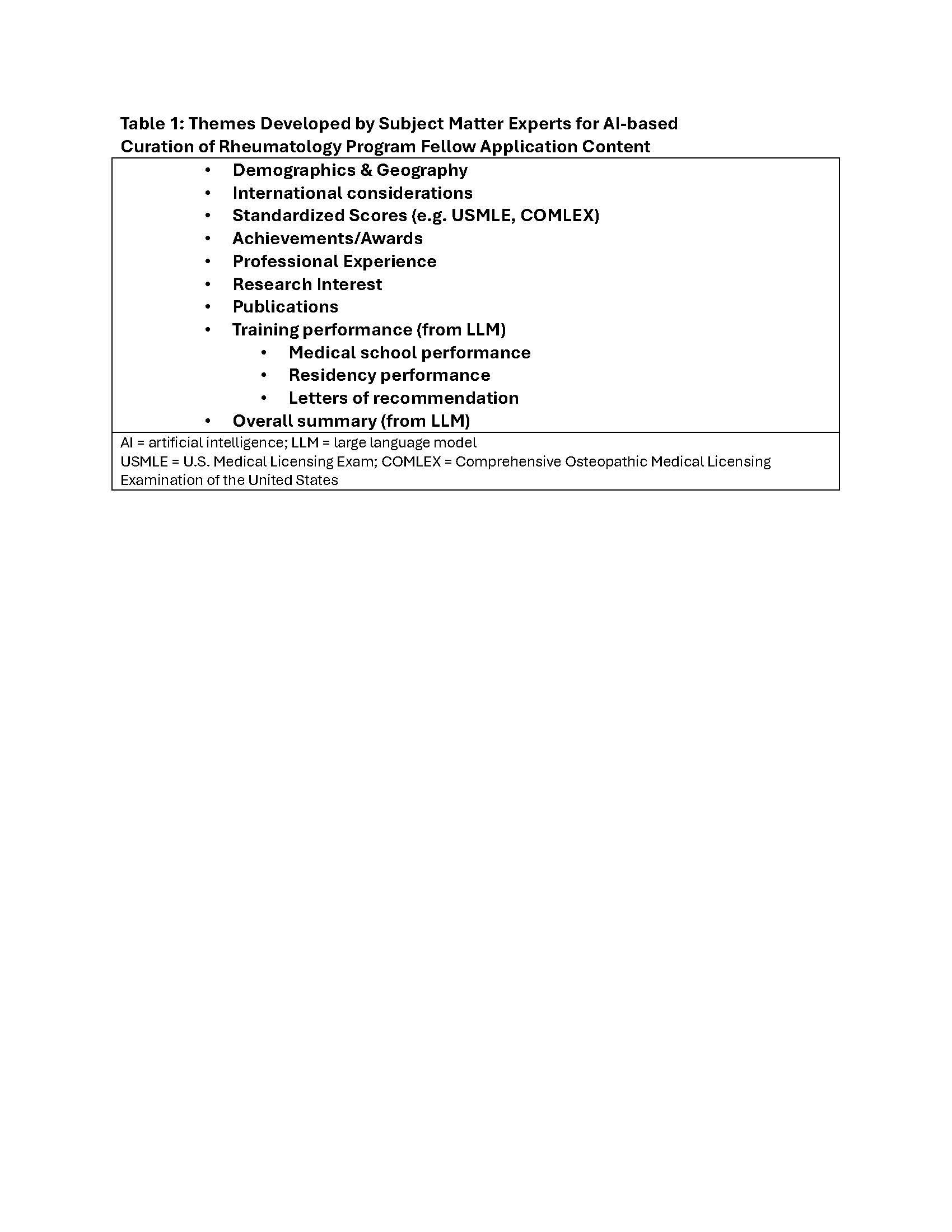

Methods: We developed an AI pipeline to help automate the fellowship screening process and reduce manual review time. It combines GPT-4o with GPU-accelerated optical character recognition (OCR). For each applicant, the two de-identified text files (derived from the PDF and the ERAS export) were concatenated and fed into an AI agent that uses GPT-4o to generate 1-2 page summaries aligned to specific feature domains (e.g., Clinical Potential, Awards, USMLE scores, Research Output). These domains were developed as part of the project and curated by subject matter experts (e.g., program directors and clinical faculty), as listed in Table 1. The output of the pipeline was a concise narrative summary that reduced each ~40-page application to 1-2 pages. A HIPAA-compliant Azure instance of GPT-4o-mini was then used to analyze a 169-document corpus of previously reviewed fellowship applications. The primary endpoint was the reduction in time required for the narrative pre-review. As a secondary metric, each applicant was scored on a scale of 1-100, and a score of at least 70 was treated as a predicted interview offer, a cutoff chosen to align with institutional review capacity. These predictions were then compared with the manually annotated corpus, that is, with whether each applicant had actually received an interview.
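The per-applicant step described above (concatenate the two de-identified texts, prompt the model with the feature domains, then apply the 70-point cutoff) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the prompt wording, the `SCORE:` reply format, and the helper names `build_messages`, `parse_score`, and `offer_interview` are hypothetical. The messages use the common role/content chat format; actually sending them to GPT-4o (for example, through an Azure OpenAI deployment) is omitted so the example runs offline.

```python
import re
from typing import Dict, List, Optional

# Example domains named in the abstract; the full curated list is in Table 1.
FEATURE_DOMAINS = ["Clinical Potential", "Awards", "USMLE scores", "Research Output"]
INTERVIEW_THRESHOLD = 70  # cutoff stated in the abstract


def build_messages(pdf_text: str, eras_text: str, domains: List[str]) -> List[Dict[str, str]]:
    """Concatenate the two de-identified texts and wrap them in a chat-style prompt."""
    application = pdf_text.strip() + "\n\n" + eras_text.strip()
    domain_lines = "\n".join(f"- {d}" for d in domains)
    # Hypothetical instructions; the authors' actual prompt is not given in the abstract.
    system = (
        "Summarize this rheumatology fellowship application in 1-2 pages, "
        "organized by the following domains:\n"
        f"{domain_lines}\n"
        "Finish with a final line of the form 'SCORE: <integer 1-100>'."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": application},
    ]


def parse_score(reply: str) -> Optional[int]:
    """Extract the hypothetical 'SCORE: N' line; return None if absent or out of range."""
    match = re.search(r"SCORE:\s*(\d{1,3})\b", reply)
    if match is None:
        return None
    score = int(match.group(1))
    return score if 1 <= score <= 100 else None


def offer_interview(score: int, threshold: int = INTERVIEW_THRESHOLD) -> bool:
    """Predict an interview offer when the score meets or exceeds the threshold."""
    return score >= threshold
```

Asking the model to end with a fixed, machine-readable line keeps the narrative summary free-form while still making the numeric score easy to extract and validate.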

Results: Across the 2017-2024 application cycles, implementation of the AI pipeline reduced the manual effort needed to pre-screen fellowship applicants for a single annual cycle (n = 180 applicants) from approximately 50 hours to approximately 2 hours in total. The analysis finished in 2 minutes and 26 seconds using the Azure OpenAI API and is scalable to larger datasets. Overall accuracy against actual interview offers was 0.69, with a recall of 0.59 (Figure 1); the confusion matrix is shown in Figure 2.
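Accuracy and recall both follow from the confusion matrix of predicted versus actual interview offers: accuracy = (TP + TN) / (TP + FP + FN + TN), and recall = TP / (TP + FN), i.e., the share of applicants who actually received interviews that the model also selected. The Python sketch below computes both from a handful of made-up scores and outcomes; the values are purely illustrative and do not reproduce the study's data or Figure 2.

```python
from typing import Dict, Sequence


def confusion_counts(actual: Sequence[bool], predicted: Sequence[bool]) -> Dict[str, int]:
    """Tally true/false positives and negatives for binary interview labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for was_interviewed, model_says_yes in zip(actual, predicted):
        if was_interviewed and model_says_yes:
            counts["tp"] += 1
        elif model_says_yes:
            counts["fp"] += 1
        elif was_interviewed:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def accuracy(counts: Dict[str, int]) -> float:
    """Fraction of all applicants whose interview outcome was predicted correctly."""
    total = sum(counts.values())
    return (counts["tp"] + counts["tn"]) / total if total else 0.0


def recall(counts: Dict[str, int]) -> float:
    """Fraction of actually interviewed applicants that the model also selected."""
    positives = counts["tp"] + counts["fn"]
    return counts["tp"] / positives if positives else 0.0


# Illustrative values only (not study data): model scores and true interview outcomes.
scores = [85, 72, 64, 90, 55, 71]
interviewed = [True, False, True, True, False, False]
predicted = [s >= 70 for s in scores]  # same 70-point cutoff as in Methods

counts = confusion_counts(interviewed, predicted)
print(counts, accuracy(counts), recall(counts))
```

Because recall only looks at applicants who were actually interviewed, a recall below accuracy (as reported here) indicates that missed interviewees, rather than false positives, account for a meaningful share of the errors.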

Conclusion: These results indicate that this novel pipeline can assist with rheumatology fellowship screening and substantially reduce reviewer time. Ongoing refinement aims to improve model performance relative to both the interview outcome and subsequent program director and faculty reviews, and to make the tool more widely available.

To cite this abstract in AMA style:

Trotter A, Gaffo A, Alexander A, Osborne J, Curtis J. Automating the Curation and Summarization of Rheumatology Fellowship Applications using Generative AI Agents and Transformer Models [abstract]. Arthritis Rheumatol. 2025; 77 (suppl 9). https://acrabstracts.org/abstract/automating-the-curation-and-summarization-of-rheumatology-fellowship-applications-using-generative-ai-agents-and-transformer-models/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/automating-the-curation-and-summarization-of-rheumatology-fellowship-applications-using-generative-ai-agents-and-transformer-models/
