Session Information

Session Type: Abstract Session

Session Time: 3:45PM-4:00PM

Background/Purpose: Rheumatology fellows frequently prepare for board examinations using case-based, multiple-choice questions. However, few resources offer enough questions for adequate preparation, and those currently available can be costly. In this pilot study, we aim to evaluate whether artificial intelligence (AI)-generated questions are suitable for board preparation.

Methods: We provided the same 5 prompts to the free versions of both Gemini and ChatGPT [1,2]. Each prompt requested one board-style question based on an uploaded PDF of an ACR guideline; the guidelines covered glucocorticoid-induced osteoporosis, vaccination, interstitial lung disease, rheumatoid arthritis and perioperative management. The following is an example of one such prompt: “Using the 2023 American College of Rheumatology clinical practice guidelines for Interstitial Lung Disease create one rheumatology board style question using reasonable alternatives as detractors”. AI-generated questions were arranged in two separate forms labeled Form 1 (Gemini) and Form 2 (ChatGPT) to blind the reviewers to which AI model created each question. Ten rating metrics covering both accuracy and technical aspects of question writing were developed by an experienced question writer. Rating metrics included answer choices on a 5-point Likert scale (ranging from strongly disagree to strongly agree) for 8 questions, a yes/no choice for whether the question was negatively worded, a 10-point scale for overall acceptability of the question, and whether the Gemini or ChatGPT question was preferred. Three of the authors, who are board-certified rheumatologists engaged in medical education, independently rated questions on paper surveys. For each AI-generated question, the average of the three raters' scores on each metric was calculated. These question averages were used to calculate overall averages for each AI model. Assuming nonparametric data, the Mann-Whitney U test was used to compare averages between models.
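The averaging and comparison steps described in the Methods can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function names are hypothetical, the ratings are invented placeholders rather than study data, and the exact two-sided p-value by enumeration is one reasonable choice for two groups of 5 questions, since the abstract does not say how the p-value was computed.

```python
from itertools import combinations
from statistics import mean


def question_averages(ratings):
    """Average the raters' scores for each question (one inner list per question)."""
    return [mean(scores) for scores in ratings]


def midranks(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U for sample x, exact two-sided p-value by full enumeration)."""
    ranks = midranks(list(x) + list(y))
    n1, n2 = len(x), len(y)
    offset = n1 * (n1 + 1) / 2
    u_obs = sum(ranks[:n1]) - offset
    center = n1 * n2 / 2  # expected U under the null hypothesis
    extreme = total = 0
    # Every way of assigning n1 of the pooled ranks to the first group
    for idx in combinations(range(n1 + n2), n1):
        u = sum(ranks[i] for i in idx) - offset
        total += 1
        if abs(u - center) >= abs(u_obs - center) - 1e-12:
            extreme += 1
    return u_obs, extreme / total


# Invented placeholder ratings (NOT study data): 5 questions x 3 raters per model
gemini_ratings = [[4, 4, 3], [5, 4, 4], [3, 4, 4], [4, 3, 3], [4, 4, 5]]
chatgpt_ratings = [[3, 3, 4], [4, 3, 3], [3, 4, 3], [4, 4, 3], [3, 2, 4]]

gemini_avgs = question_averages(gemini_ratings)
chatgpt_avgs = question_averages(chatgpt_ratings)
u_stat, p_value = mann_whitney_u(gemini_avgs, chatgpt_avgs)
print(f"Gemini mean {mean(gemini_avgs):.2f}, ChatGPT mean {mean(chatgpt_avgs):.2f}")
print(f"U = {u_stat}, exact two-sided p = {p_value:.3f}")
```

With only 5 questions per model there are just 252 possible rank assignments, so even a complete separation of the two groups yields a fairly coarse p-value; this is worth keeping in mind when reading comparisons from a pilot of this size.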

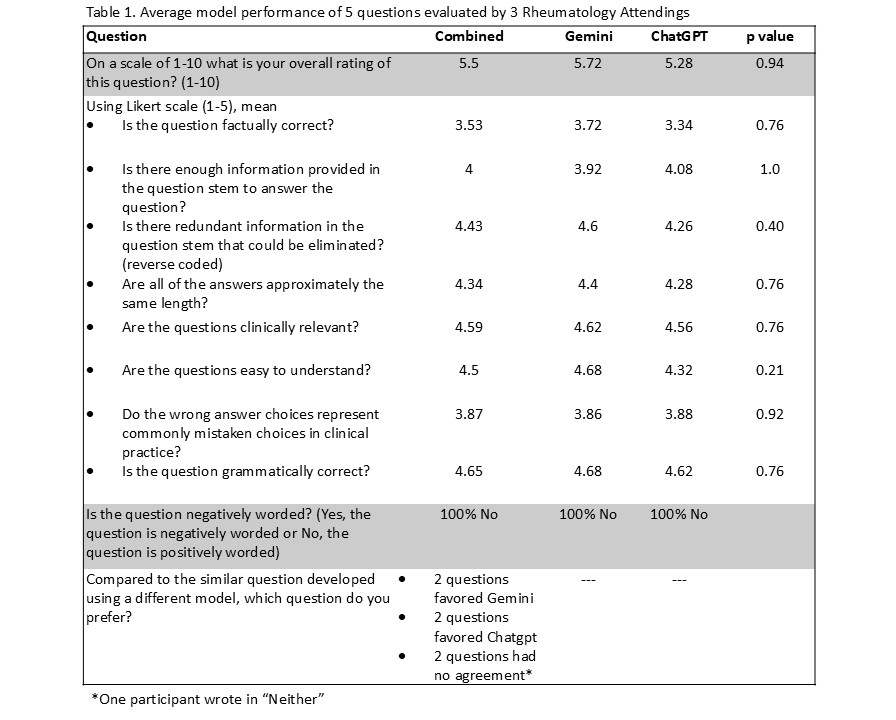

Results: Gemini and ChatGPT created 5 questions each with 4-5 answer choices per question. Attending rheumatologists rated these questions lowest on whether the question was factually correct (Combined 3.53 vs Gemini 3.72 vs ChatGPT 3.34) and whether the wrong answer choices represented commonly mistaken choices in clinical practice (Combined 3.87 vs Gemini 3.86 vs ChatGPT 3.88). Technical aspects of question writing were the highest rated, including whether the question was negatively worded (100% No) and whether the question was grammatically correct (Combined 4.65 vs Gemini 4.68 vs ChatGPT 4.62). The overall score out of 10 was low for both models (Combined 5.5 vs Gemini 5.72 vs ChatGPT 5.28).
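As a quick consistency check on the reported figures, the short sketch below assumes that each "Combined" value is the mean over all 10 questions, which with 5 questions per model reduces to the mean of the two model averages. That interpretation is an assumption, since the abstract does not define "Combined"; the function name and dictionary keys are illustrative only.

```python
def combined_mean(mean_a, mean_b, n_a=5, n_b=5):
    """Pooled mean of two groups from their group means and sizes."""
    return (mean_a * n_a + mean_b * n_b) / (n_a + n_b)


# (Combined, Gemini, ChatGPT) values as reported in the Results
reported = {
    "factually correct": (3.53, 3.72, 3.34),
    "plausible wrong choices": (3.87, 3.86, 3.88),
    "grammatically correct": (4.65, 4.68, 4.62),
    "overall score (out of 10)": (5.5, 5.72, 5.28),
}

for metric, (combined, gemini, chatgpt) in reported.items():
    pooled = combined_mean(gemini, chatgpt)
    # Allow for rounding of the published values to two decimals
    consistent = abs(pooled - combined) < 0.005
    print(f"{metric}: pooled {pooled:.2f}, reported {combined}, consistent: {consistent}")
```

Under that assumption, all four reported "Combined" values agree with the per-model averages to within rounding.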

Conclusion: While both AI platforms together created 10 grammatically correct board-style questions that were rated highly on technical aspects of question writing, rheumatology attendings rated them poorly for correctness, relevant distractors and overall score. Learners should exercise caution when using AI to study by reviewing the original text or having experts assess questions for accuracy and validity. Improvements could be made by refining prompts and iteratively modifying the questions; however, this was not pursued in the current study.

To cite this abstract in AMA style:

Deffendall C, Annapureddy N, Byram K, Chew E, Reese T. Two Common AI Models Create Poor Rheumatology Board Style Questions [abstract]. Arthritis Rheumatol. 2025; 77 (suppl 9). https://acrabstracts.org/abstract/two-common-ai-models-create-poor-rheumatology-board-style-questions/. Accessed .

ACR Meeting Abstracts - https://acrabstracts.org/abstract/two-common-ai-models-create-poor-rheumatology-board-style-questions/